Designing clarity at scale: How Replay transformed customer support workflows

A case study using jobs-to-be-done and the double diamond to solve context gaps in CX

- Name: Replay - Session tracking, behavioral analytics

- Business

- My Role: End-to-end Product Design & Leadership

- Deliverables: UX Strategy, UX/UI Design, Validation, Cross-functional Artifacts, Post Launch

- View Replay Figma project

- View Replay FigJam project

Overview

Replay was a net-new feature I led from concept to delivery at a CX software startup, designed in direct response to support team challenges surfaced by our enterprise customer, Nordstrom.

By grounding the feature in cross-role JTBD research and real-world escalation logs, we turned fragmented support struggles into a unified product insight layer. Post-launch, Replay led to:

Replay not only improved support efficiency for customers — it helped elevate our product positioning as a diagnostic CX platform, unlocking expansion conversations across additional enterprise accounts.

Discovery to delivery

Discovery

Identified friction points through research analysis and interviews. JTBD helped reveal what users were really trying to achieve.

Define

Synthesized insights into key problems and prioritized them. JTBD guided problem framing and design focus.

Explore

Ideated and prototyped solutions to test how Replay could improve resolution. JTBD ensured alignment with user goals.

Deliver

Delivered end-to-end UX, prototypes, UI components, and design specs, all tied to measurable impact.

Uncovering CX struggle through behavior and intent

I used mixed methods to triangulate the underlying struggles:

Discovery outcome

This phase validated a clear opportunity to build a shared visibility layer for CX work — one that removes guesswork and exposes the customer’s journey as behavioral evidence, not hearsay. This informed our product brief and paved the way for the Define phase.

Key insight themes

- Lack of behavioral context stalls resolution

  Struggle: Agents and CX teams often received vague or incomplete customer complaints.

- Escalations stem from internal blind spots

  Struggle: Developers and product managers lacked visibility into what the customer experienced before bugs were reported.

- Manual reconstruction is error-prone and time-consuming

  Struggle: CX and support agents manually pieced together customer journeys from logs, CRM notes, or multiple tools.

- Analytics miss the human story

  Struggle: Quantitative tools (dashboards, funnels) failed to explain why users struggled.

- Frontline support workarounds mask systemic issues

  Struggle: Agents developed their own hacks (e.g. screen shares, mock logins) to resolve tickets.

The JTBDs

Core JTBD

JTBD: “When an issue is raised by a customer or detected in analytics, I want to see exactly what the user did, so I can understand the issue clearly and resolve it quickly without guesswork or escalation.”

Struggles

- CX teams relied on stitching together fragmented data sources (chat logs, support tickets, backend logs, product analytics).

- Context was missing or delayed, forcing escalations or internal Slack threads.

- Guesswork and assumptions led to slower resolution and poor customer experiences.

JTBD: Support Agent (SA)

JTBD: “When investigating bugs or regressions, I want to replay the exact steps users took, so I can debug faster without reproducing manually.”

Motivations

- Reduce time-to-resolution.

- Deliver confident, accurate responses on the first touch.

- Increase CSAT and deflection of unnecessary escalations.

JTBD: CX Analyst

JTBD: “When support patterns emerge or a new release drops, I want to investigate sessions in bulk, so I can spot usability or workflow problems and influence product fixes with evidence.”

Motivations

- Drive systemic improvements and remove UX friction.

- Detect usability pain points early.

- Improve top call drivers and reduce volume through insights.

JTBD: Product Manager

JTBD: “When CX or support flags recurring friction points, I want to validate the impact by seeing real user sessions, so I can prioritize confidently and advocate for fixes with stakeholders.”

Motivations

- Validate and quantify customer pain with behavioral evidence

- Confidently prioritize product backlog items

- Close the loop with CX and support teams using hard evidence

JTBD: Developer

JTBD: “When a bug is assigned to me, I want to replay what the user did leading up to the error, so I can reproduce the issue and fix it faster without guessing or recreating edge cases.”

Motivations

- Reproduce bugs reliably and reduce fix time.

- Spend less time decoding vague Jira tickets.

- Improve velocity and code quality.

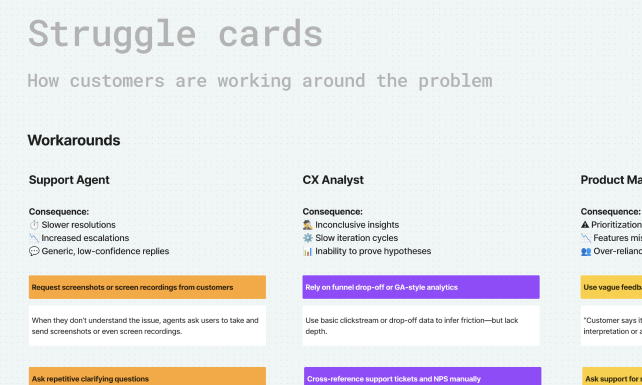

Define Phase — Framing the Right Problem

After uncovering deep behavioral struggles across roles in the Discovery phase, we used the Define phase to narrow in on the real problem. We synthesized dozens of quotes and interactions using the JTBD framework to surface role-specific struggles, identify tool-based workarounds, and quantify unmet outcomes.

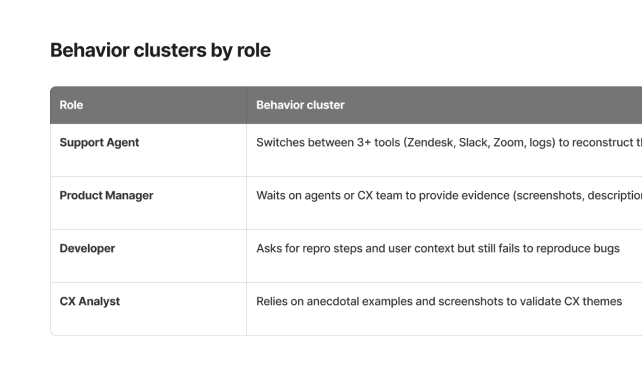

What we saw in Discovery

Each role — Support Agent, Product Manager, Developer, CX Analyst — had to stitch together the user experience manually. Agents copied logs into Slack. PMs relied on secondhand screenshots. Developers asked for Zoom calls. These were not just workflow issues — they were friction points on the JTBD timeline.

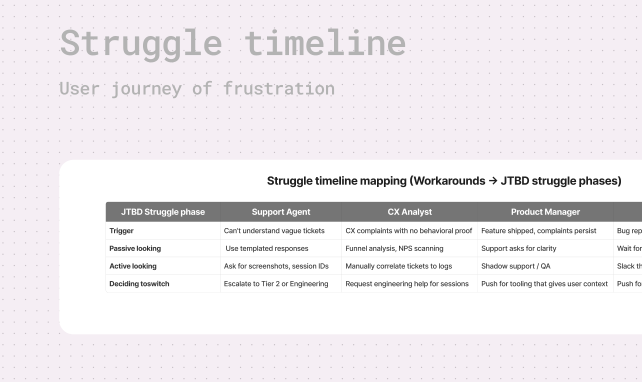

Mapping JTBD struggles

Using the JTBD struggle timeline, we placed each behavior in context. We could now see when and why users felt increasing frustration — and what moved them from tolerating the problem to seeking a new solution.

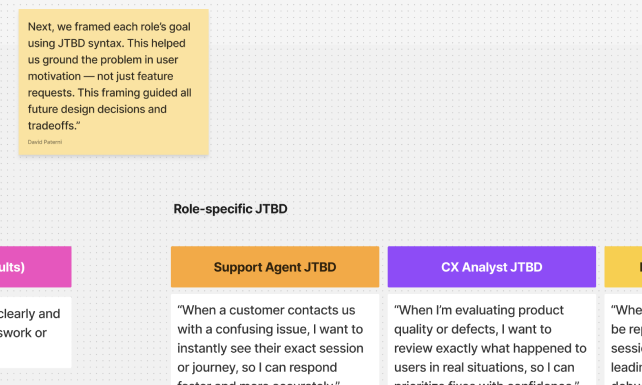

Role-specific JTBD

Next, we framed each role’s goal using JTBD syntax. This helped us ground the problem in user motivation — not just feature requests. This framing guided all future design decisions and tradeoffs.

- Support Agent

- CX Analyst

- Product Manager

- Developer

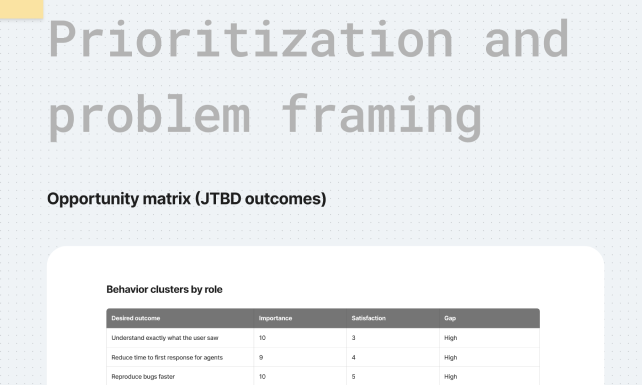

The underserved opportunity

By scoring desired outcomes, we identified a clear pattern: the ability to visually understand the user’s experience, quickly and without switching tools, was rated highly important but had low satisfaction. This was our design opportunity.
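To make the scoring step concrete, here is a minimal sketch of one common way to rank desired outcomes: the opportunity formula from Outcome-Driven Innovation, `importance + max(importance - satisfaction, 0)`, with both ratings on a 0 to 10 scale. The case study does not say which scoring method was actually used, so the formula choice is an assumption. The `Outcome` type, the outcome names, and every rating below are hypothetical placeholders, not research data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    name: str
    importance: float    # 0-10 rating (hypothetical value)
    satisfaction: float  # 0-10 rating (hypothetical value)


def opportunity_score(outcome: Outcome) -> float:
    """Return importance + max(importance - satisfaction, 0)."""
    gap = max(outcome.importance - outcome.satisfaction, 0.0)
    return outcome.importance + gap


def rank_outcomes(outcomes: list[Outcome]) -> list[tuple[str, float]]:
    """Sort outcomes from most to least underserved."""
    scored = [(o.name, opportunity_score(o)) for o in outcomes]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Placeholder ratings for illustration only.
outcomes = [
    Outcome("See the user's experience without switching tools", 9.0, 3.0),
    Outcome("Export ticket history", 5.0, 6.0),
    Outcome("Tag sessions for later review", 7.0, 5.0),
]

ranked = rank_outcomes(outcomes)
for name, score in ranked:
    print(f"{score:4.1f}  {name}")
```

An outcome that is important but poorly satisfied gets the largest score, which matches the "highly important, low satisfaction" pattern described above. An outcome that is already over-served (satisfaction above importance) scores only its importance.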

The before state

We visualized the before-state using struggle cards. Each one tells a story of tool overload, time waste, and unnecessary effort — all symptoms of an unmet job.

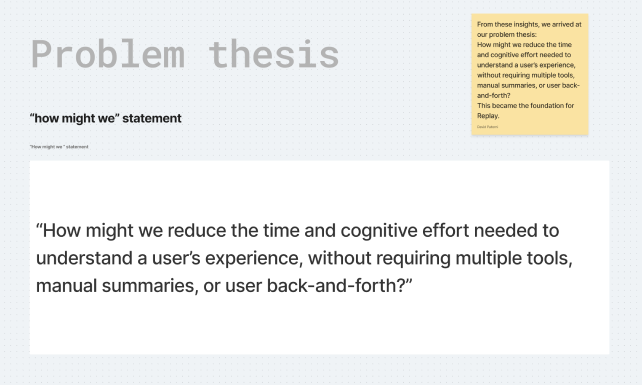

Defining the problem

From these insights, we arrived at our problem thesis: How might we reduce the time and cognitive effort needed to understand a user’s experience, without requiring multiple tools, manual summaries, or user back-and-forth? This became the foundation for Replay.

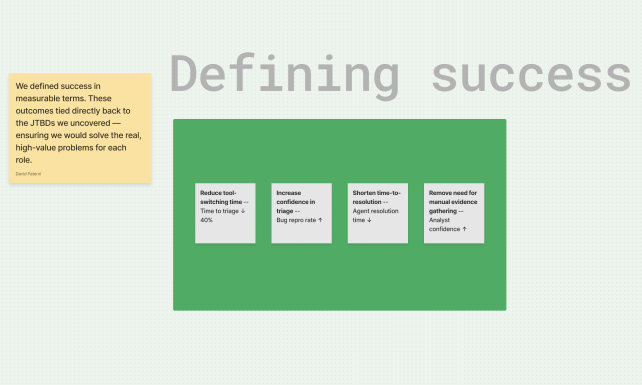

Defining success

Lastly, we defined success in measurable terms. These outcomes tied directly back to the JTBDs we uncovered — ensuring we would solve the real, high-value problems for each role.

- Reduce tool-switching time

- Increase confidence in triage

- Shorten time-to-resolution

- Remove need for manual evidence gathering

What we defined: A clear opportunity, a shared problem, and a focused path forward

Replay was no longer just a feature idea — it became a strategic enabler of fast, accurate, and collaborative issue resolution.

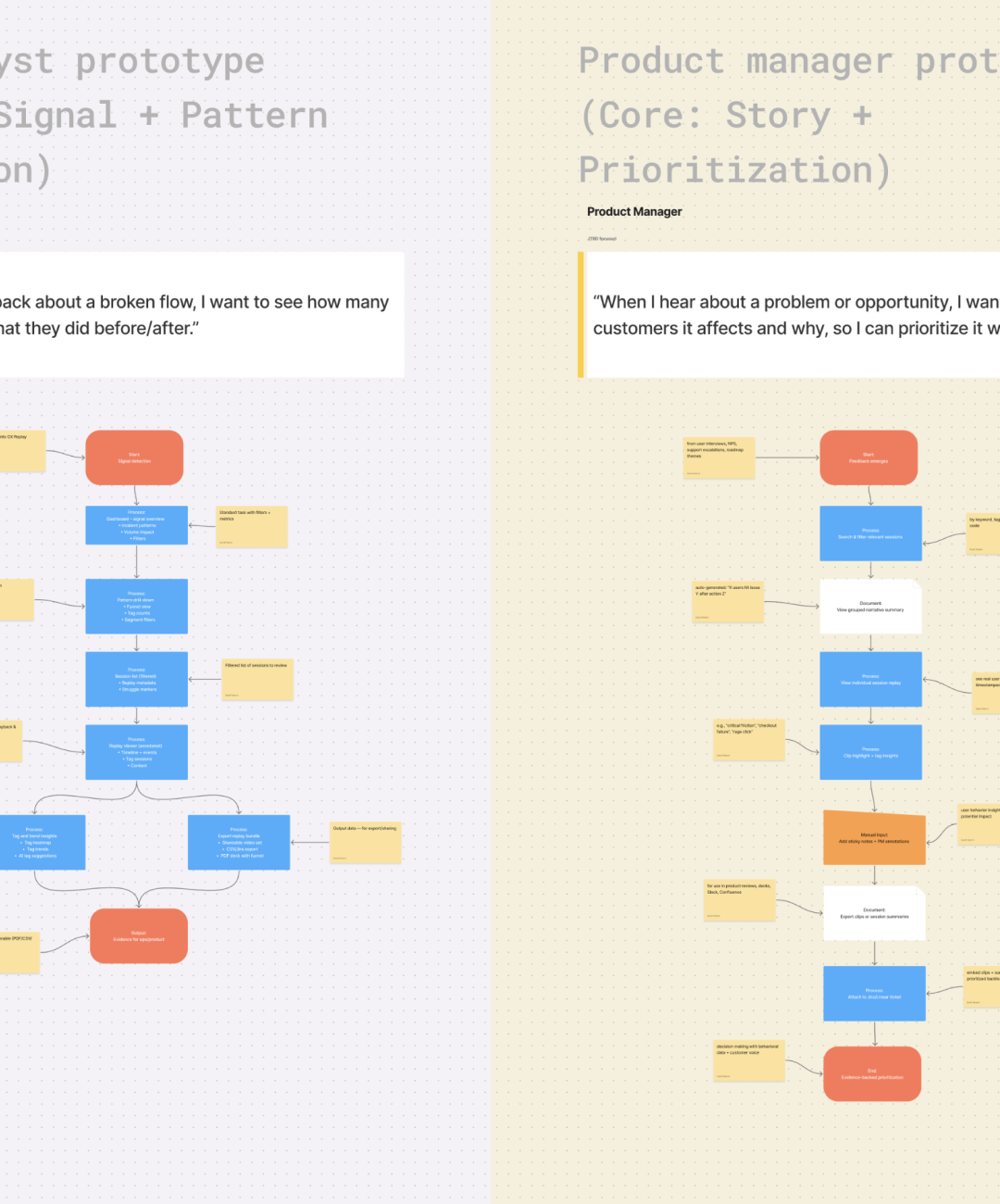

Framing and testing potential solutions with cross-functional teams

After mapping JTBD and pain signals across roles, we explored prototypes that addressed their most critical moments of struggle. Replay was a net-new feature, so we worked closely with Nordstrom’s CX, product, and engineering teams to validate desirability, feasibility, and usability before building.

Exploration methods

We grounded solution testing in cross-functional feedback.

JTBD-aligned prototypes

Role-specific solution paths

| Role | Prototype tested | JTBD trigger addressed |
|---|---|---|
| Support Agent | Replay timeline with escalation bookmarks | “I can’t explain what happened on this call” |
| CX Analyst | Query/tag filtering + batch Replay viewer | “I’m seeing a spike in refunds but no pattern yet” |
| Product Manager | Replay + session metadata in journey tools | “I don’t know what experience caused the drop” |
| Developer | Event-based data architecture testing | “I need to know if this breaks our infrastructure” |

Speed + signals

Agents need speed. Analysts need signal. PMs need story.

Entry points

Entry points into Replay must reflect each role’s workflow.

When vs. how

“When to replay” was as important as “how to replay.”

Hidden blockers

Storage and cost surfaced as hidden blockers; involving developers early saved months.

What we learned

Each role’s expectations around “replay” were radically different.

Agents prioritized speed-to-resolution and wanted replays that aligned with escalation bookmarks. Analysts needed signal clarity—tools to batch-analyze spikes and tag patterns across sessions. PMs, by contrast, saw Replay as a narrative tool to explain unknown drops in the user journey.

These differences revealed that Replay could not be a single modal experience—it had to adapt based on workflow context. Additionally, developer feedback surfaced a critical technical constraint: storing full replays by default was not sustainable. By shifting to a trigger-based architecture early, we preserved feasibility without compromising value.
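The trigger-based idea can be sketched as a small rolling buffer per session that is persisted only when a trigger event arrives. This is an illustrative sketch, not Replay's actual implementation: the `Event` and `TriggeredRecorder` types, the event kinds, the window size, and the in-memory `saved` list standing in for real storage are all assumptions.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Event:
    session_id: str
    kind: str         # e.g. "click", "navigate", "error" (hypothetical kinds)
    timestamp: float


@dataclass
class TriggeredRecorder:
    """Keep a short rolling window per session; persist it only on a trigger."""
    trigger_kinds: frozenset[str]
    window_size: int = 50
    _buffers: dict[str, deque] = field(default_factory=dict)
    saved: list[list[Event]] = field(default_factory=list)  # stand-in for storage

    def record(self, event: Event) -> bool:
        """Buffer the event; return True if it caused a snapshot to be saved."""
        buffer = self._buffers.setdefault(
            event.session_id, deque(maxlen=self.window_size)
        )
        buffer.append(event)  # the oldest event drops out once the window is full
        if event.kind in self.trigger_kinds:
            self.saved.append(list(buffer))  # snapshot the context leading up to it
            return True
        return False


recorder = TriggeredRecorder(
    trigger_kinds=frozenset({"error", "escalation"}), window_size=3
)
kinds = ["click", "navigate", "click", "click", "error", "click"]
for i, kind in enumerate(kinds):
    recorder.record(Event("s1", kind, float(i)))

print(len(recorder.saved))
print([e.kind for e in recorder.saved[0]])
```

Sessions that never hit a trigger cost only a bounded in-memory window, while sessions that do hit one keep the steps immediately before the trigger. That is the trade-off between storage cost and diagnostic value described above.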

Delivering clarity, speed, and alignment at scale

I led the design and launch of CX Replay—a net-new feature built from scratch to accelerate issue resolution and improve cross-team alignment. Grounded in the Double Diamond and JTBD frameworks, I delivered production-ready UX flows, prototypes, UI components, filtering logic, and full design specs—shaped by real user needs and validated by results.

Support agents resolved tickets faster: Replay gave agents instant context—eliminating the need to ask users to reproduce steps or dig through logs manually.

Fewer tickets escalated to engineering: Product managers and analysts used Replay to triage bugs more effectively—flagging duplicates and eliminating false positives.

CX analysts diagnosed issues quicker: Replay surfaced event sequences and user paths that previously required stitching together logs, screenshots, and call transcripts.

Developers got what they needed—faster: Engineers used Replay links embedded in Jira tickets to directly view what happened, reducing time spent reproducing edge cases.

Visualizing delivery

Screens of Replay’s key flows, design decisions, and supporting artifacts from concept to launch.